What do people use Discord for, exactly?

Too much.

It’s a chat platform geared toward gamers, with voice chat, screen sharing, and streaming… that’s since been co-opted by vloggers. Most unsettlingly, though, it’s being used for customer support and documentation.

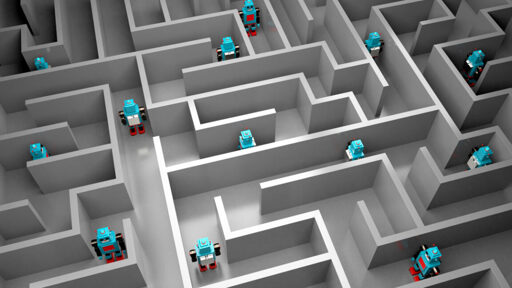

A lot of knowledge bases are buried in the walled garden of servers and a labyrinth of chat rooms.

The thing is, I treat those servers as a “last resort”, not as my main source.